Ville Pitkäkangas

Cyber threats are evolving and becoming increasingly sophisticated, posing risks to individuals, organisations, and nations. This calls for new methods of cybersecurity intelligence. One of its domains is the analysis of social media and news feeds, where artificial intelligence (AI) can detect potentially harmful content, such as posts about threatening situations or fake news articles.

Artificial intelligence can analyse potential cyber threats in several ways. It can be trained to find content suggesting danger in online images, such as photos posted on social media, or to analyse the text of posts and comments shared on social networks and of news items published on websites. Photo analysis is typically based on an artificial neural network (ANN) that examines the image both as a whole and in parts and compares the results with what it has learned. If the AI finds that the photo resembles pictures associated with threatening situations, it classifies the image accordingly.

Text analysis works in a similar way: the material, such as a news article, is split into elements that are then examined and compared with what is already known, and the result is assigned to the best-fitting category. The many AI-based techniques for this purpose are collectively called natural language processing (NLP). In this subdomain of AI, each word in the analysed text can be considered together with its part of speech, its role in the sentence, its context, and the words that co-occur with it. A trained AI program, regardless of its purpose, is called a model.
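The idea of comparing the words of a new text with what has been learned from labelled examples can be illustrated with a classic, deliberately simple technique: a multinomial naive Bayes classifier over word counts. This is not the model used in Project Alpha, which the article does not specify. The class name, the tokenizer, and the six training sentences are hypothetical and serve only to show the principle.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep runs of letters, digits and apostrophes as words.
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesTextClassifier:
    """Hypothetical example: multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = defaultdict(Counter)  # label -> word frequencies
        self.doc_counts: Counter = Counter()                         # label -> number of texts
        self.vocabulary: set[str] = set()                            # all words seen in training

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesTextClassifier":
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocabulary.update(tokens)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.doc_counts.items():
            # Log prior: how common this category was in the training data.
            score = math.log(doc_count / total_docs)
            label_total = sum(self.word_counts[label].values())
            for token in tokenize(text):
                # Smoothed log likelihood of the word given the category, so that
                # a word never seen with this label does not zero out the score.
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (label_total + vocab_size))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical training sentences, not data from the project.
texts = [
    "attack planned on the power grid tonight",
    "bomb threat reported at the station",
    "hackers threaten to leak stolen data",
    "lovely sunny day at the beach",
    "new cafe opened downtown with great coffee",
    "our team won the football match",
]
labels = ["threat"] * 3 + ["non-threat"] * 3

clf = NaiveBayesTextClassifier().fit(texts, labels)
print(clf.predict("they threaten an attack on the station"))  # threat
print(clf.predict("great coffee at the beach"))               # non-threat
```

Real NLP models use far richer features, such as word context and sentence structure, but the basic pattern of learning from labelled examples and assigning the best-fitting category is the same.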

Business Finland funds Project Alpha, which aims to create tools that help authorities improve their situational awareness and knowledge-based management (Centria 2022). In the project, Centria University of Applied Sciences developed a prototype that demonstrates how artificial intelligence can detect fake news and identify social media posts related to potential threats.

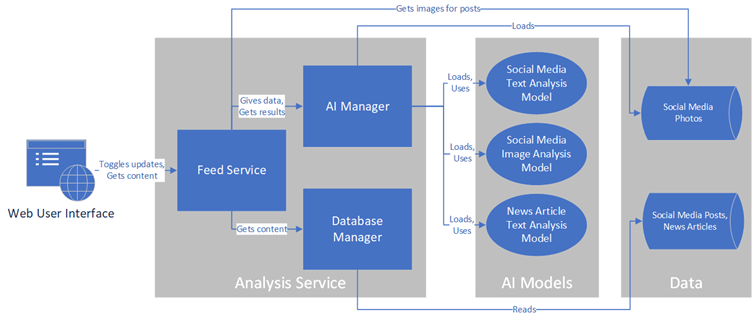

The prototype consists of three parts: a web-based user interface that shows the news and social media feeds together with the AI-based classification results, a service that performs the data analysis and classification, and databases containing the articles and posts for the AI to analyse. (Figure 1.)
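The three-part architecture can be sketched as a small data flow. Items waiting in a store are picked up by an analysis service, classified, and handed on for display. This is a minimal sketch under stated assumptions, not the prototype's code. The `Item` record, the `analyse_pending` function, and the keyword-based placeholder classifier are all hypothetical, and a plain Python list stands in for the databases.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Item:
    """Hypothetical record for one news article or social media post."""
    item_id: int
    kind: str                    # "news" or "post"
    text: str
    label: Optional[str] = None  # filled in by the analysis service


def analyse_pending(store: list[Item], classify: Callable[[Item], str]) -> list[Item]:
    """Classify every item that has no label yet and return the newly labelled items.

    `store` stands in for the databases, `classify` for the AI models, and the
    returned list for what the web interface would display next.
    """
    updated = []
    for item in store:
        if item.label is None:
            item.label = classify(item)
            updated.append(item)
    return updated


def keyword_stub(item: Item) -> str:
    # Placeholder only: a real service would call a trained model here.
    return "threat" if "attack" in item.text.lower() else "non-threat"


store = [
    Item(1, "news", "Officials deny attack rumours"),
    Item(2, "post", "Sunny day at the harbour"),
]
for item in analyse_pending(store, keyword_stub):
    print(item.item_id, item.kind, item.label)
```

Passing the classifier in as a function keeps the service independent of any particular model, so a stub can later be swapped for a trained one.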

The prototype analyses data with three AI models. Two of them are applications of NLP and classify news articles and the text of social media posts, respectively. The third model categorises the photos in social media posts. The dataset for the prototype consists of social media content and news articles. The social media content was simulated using text written manually in this project and photos collected in other projects, while the news articles were obtained from an open-source project (Kumar 2019). For training the AI, photos in which any activity had been detected were labelled as threat-related and all others as non-threat-related.
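The photo labelling rule is simple enough to state directly in code. The annotation format (a list of detected activities per file name) and the file names are hypothetical. Counting the resulting labels is a quick way to check class balance before training.

```python
from collections import Counter


def label_photo(detected_activities: list[str]) -> str:
    """Rule from the article: a photo with any detected activity counts as threat-related."""
    return "threat-related" if detected_activities else "non-threat-related"


# Hypothetical annotations: file name -> activities detected in the photo.
annotations = {
    "photo_001.jpg": ["person running"],
    "photo_002.jpg": [],
    "photo_003.jpg": ["crowd gathering", "person climbing a fence"],
}
training_labels = {name: label_photo(acts) for name, acts in annotations.items()}
class_balance = Counter(training_labels.values())
print(class_balance)  # Counter({'threat-related': 2, 'non-threat-related': 1})
```

A rule this broad treats every depicted activity as a potential threat, which is one reason the quality of the labels matters as much as their quantity.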

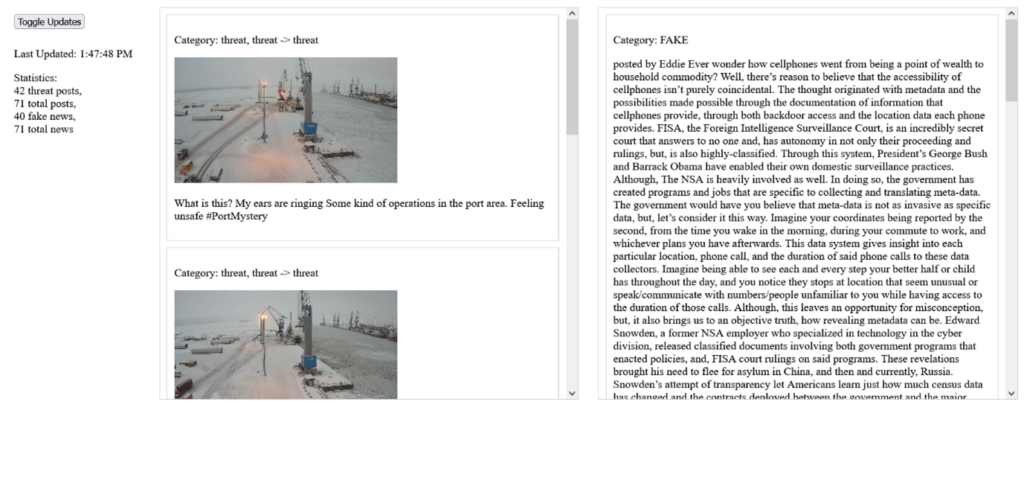

The web interface shows the user what content the AI has analysed and which items it considers to be related to cyber threats. The application can be paused or resumed with an on-screen button that stops and restarts the continuous updating and analysis of the news and social media feeds. Statistics provide additional information: how many posts and news articles has the AI analysed in total, and how many of them were probably related to threats? (Figure 2.)
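The pause/resume button and the statistics panel can be modelled as a small piece of state. The `FeedMonitor` class below is hypothetical, because the article does not describe how the prototype stores its counters. It illustrates only the behaviour described: nothing is counted while the feeds are paused, and the summary reports the analysed items and how many were probably threat-related.

```python
from dataclasses import dataclass


@dataclass
class FeedMonitor:
    """Hypothetical model of the pause/resume button and the statistics panel."""
    paused: bool = False
    analysed_news: int = 0
    analysed_posts: int = 0
    threats: int = 0

    def toggle(self) -> None:
        # On-screen button: stop or restart the continuous update and analysis.
        self.paused = not self.paused

    def record(self, kind: str, is_threat: bool) -> bool:
        """Count one analysed item; ignored while paused. Returns True if counted."""
        if self.paused:
            return False
        if kind == "news":
            self.analysed_news += 1
        else:
            self.analysed_posts += 1
        if is_threat:
            self.threats += 1
        return True

    def summary(self) -> str:
        total = self.analysed_news + self.analysed_posts
        share = self.threats / total if total else 0.0
        return (f"news: {self.analysed_news}, posts: {self.analysed_posts}, "
                f"probably threat-related: {self.threats} ({share:.0%})")


monitor = FeedMonitor()
monitor.record("news", is_threat=False)
monitor.record("post", is_threat=True)
monitor.toggle()                        # pause
monitor.record("post", is_threat=True)  # ignored while paused
monitor.toggle()                        # resume
monitor.record("news", is_threat=False)
print(monitor.summary())  # news: 2, posts: 1, probably threat-related: 1 (33%)
```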

The prototype developed in Project Alpha is simple, but it demonstrates that AI could be an effective tool for detecting cyber threats. The software correctly detected possible threats in over 90% of the cases in the experimental dataset. Further analysis of the AI's classification results could reveal additional information about the attacker and their intentions, motivations, capabilities, and targets. However, the quantity and quality of the training data profoundly affect the accuracy and reliability of the AI. Plenty of material from real-world situations is therefore required to create a digital cyber intelligence expert that can handle even unseen situations with reasonable precision. The machine cannot tell threats from non-threats without a solid idea of what constitutes each category.
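The article reports that threats were detected correctly in over 90% of the cases, but it does not name the exact metric. The sketch below uses made-up labels rather than the project's data to show why this matters. Overall accuracy and the share of actual threats that were caught (recall) can differ noticeably on the same predictions. The `evaluate` function is a hypothetical helper.

```python
def evaluate(true_labels: list[str], predicted: list[str],
             positive: str = "threat") -> dict[str, float]:
    """Return overall accuracy and recall for the positive (threat) class."""
    if len(true_labels) != len(predicted) or not true_labels:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(true_labels, predicted))
    correct = sum(t == p for t, p in pairs)                          # all right answers
    caught = sum(t == positive and p == positive for t, p in pairs)  # threats found
    actual = sum(t == positive for t, _ in pairs)                    # threats present
    return {
        "accuracy": correct / len(pairs),
        "threat_recall": caught / actual if actual else 0.0,
    }


# Illustrative labels only: 4 real threats, 6 harmless items, one threat missed.
true_labels = ["threat"] * 4 + ["non-threat"] * 6
predicted = ["threat"] * 3 + ["non-threat"] * 7
print(evaluate(true_labels, predicted))  # {'accuracy': 0.9, 'threat_recall': 0.75}
```

With these illustrative labels, accuracy is 90% even though one threat in four is missed. Large, realistic datasets are therefore needed to evaluate a model as well as to train it.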

References

Centria. 2022. Project Alpha. Available at: https://net.centria.fi/en/project/project-alpha-2/. Accessed 4 March 2024.

Kumar, S. 2019. Big_Data_Project: Fake News Detection. GitHub repository. Available at: https://github.com/san089/Big_Data_Project. Accessed 13 March 2024.

Ville Pitkäkangas

RDI Developer

Centria University of Applied Sciences

Tel. 040 193 8974