Mitha Rachel Jose

Heikki Kaakinen

The AgroTeknoa project is carried out in Central Ostrobothnia and the southern part of Northern Ostrobothnia. Together, these areas form one of the most significant livestock production regions in Finland. The project focuses on introducing new agricultural technologies and provides knowledge and user experience of new machinery, equipment, and production methods in livestock production.

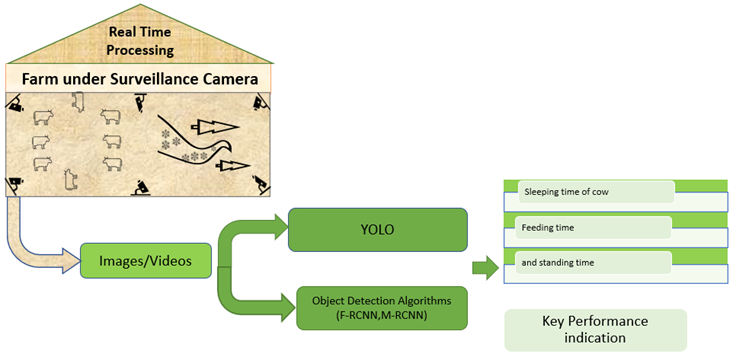

The objective of the project is to improve the eco-friendliness, efficiency, and profitability of agriculture and livestock production through digitalization. The target group consists of the agricultural entrepreneurs and agricultural machinery contractors of the area. This article discusses an application that analyzes surveillance camera footage of cattle to recognize their activities in the barn, such as resting, feeding, and standing.

Introduction

Object detection is the task of locating a particular object, such as a face, in a larger image or a video. Distinguishing, recognizing, and classifying objects in images is not a difficult or tedious task for the human eye. However, it is hard for a machine to understand objects in real-world circumstances, because their appearance varies with factors such as shape, size, color, and texture (Uijlings 2013). Recent developments in artificial intelligence and computer vision offer a wide range of solutions for object detection and tracking. The proposed approach for detecting cow movements and activities is shown in figure 1.

Traditionally, models of this kind have been developed using image processing methods, machine learning algorithms, or combinations of the two. Image processing methods extract features from images via image enhancement, color space operations, texture and edge detection, morphological operations, filtering, and so on. The challenges in processing images and videos collected from animal environments are associated with unpredictable lighting and backgrounds, differences in animal shapes and sizes, similar body language between animals, occlusion and overlapping of animals, and low resolution. These challenges considerably affect the performance of image processing methods and result in poor algorithm generalization. They can be addressed by using an object detection system.
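To make the classical feature extraction step more concrete, the following is a minimal sketch of edge detection by filtering. It assumes a grayscale image stored as nested lists and uses the Sobel operator. Both choices are assumptions made for illustration, since the article does not state which operator or image format was used.

```python
# Sobel kernels for horizontal and vertical intensity changes
# (the choice of the Sobel operator is an assumption for this sketch).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude of a grayscale image (list of rows).

    Border pixels are left at 0.0 because the 3x3 kernel does not fit there.
    """
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            result[y][x] = (gx * gx + gy * gy) ** 0.5
    return result


# Illustrative 4x4 image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(image)
for row in edges:
    print(row)
```

The interior pixels next to the brightness step receive a large magnitude, while a uniformly lit area gives zero. This also hints at why such hand-crafted features are sensitive to the lighting and background changes described above.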

Object Detection

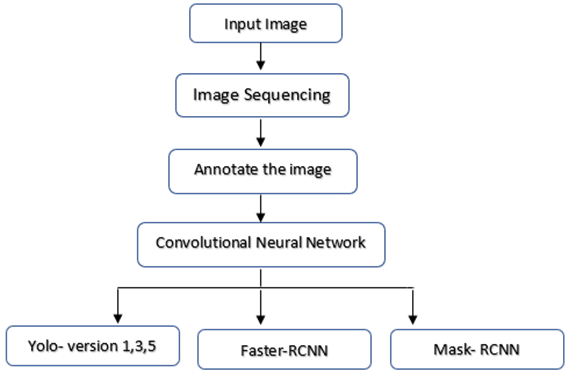

Object detection methods are applied to real-world objects such as people, animals, and threatening objects. Object detection algorithms use a wide range of image processing techniques to extract the position of the desired object. Object detection is commonly used in areas such as image retrieval and security (Kim 2021). Different image classification methods are used to extract features from the images, and they are explained in the section below. A block diagram of the method is shown in figure 2.

YOLO stands for You Only Look Once. It is an algorithm that uses a convolutional neural network to detect objects in real time. YOLO is known for its speed, accuracy, and learning capabilities. It has been used in many applications, such as detecting people, traffic signals, parking meters, and vehicles. YOLO has been released in a series of versions, from version 1 (v1) to version 5 (v5). The algorithm builds on three techniques: residual blocks, bounding box regression, and intersection over union (Ge 2021).

Faster R-CNN is an object detection model that improves on Fast R-CNN by combining a region proposal network (RPN) with the convolutional neural network (CNN) model. The RPN shares full-image convolutional features with the detection network and simultaneously predicts object bounds and scores at each position.

Mask R-CNN is an object detection model based on deep convolutional neural networks, developed by a group of Facebook AI researchers in 2017. The model can return both a bounding box and a mask for each detected object in an image (Inbaraj 2021).
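Intersection over union, one of the techniques listed for YOLO above, measures how well a predicted bounding box overlaps a reference box. The following is a minimal sketch, assuming boxes are given as (x_min, y_min, x_max, y_max) in pixels. The box format and the example coordinates are assumptions for illustration and are not taken from the project.

```python
def intersection_over_union(box_a, box_b):
    """Return the IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if no overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Illustrative boxes: a predicted cow box and a hand-labelled reference box.
predicted = (50, 50, 150, 150)
reference = (100, 100, 200, 200)
print(intersection_over_union(predicted, reference))
```

A value of 1.0 means the boxes coincide and 0.0 means they do not overlap. Detectors typically compare this value against a threshold to decide whether a prediction counts as a match.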

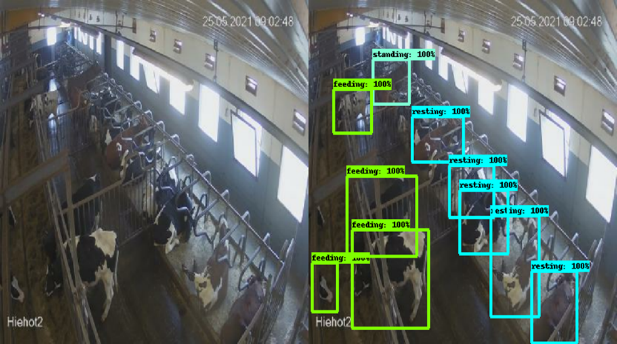

The above-mentioned methods help to detect the cows in an image and classify them as resting, feeding, or standing. The entire model is implemented on images of cattle under camera surveillance, and the results are compared with several state-of-the-art techniques.
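Once a detector has labelled each cow, the per-frame output can be condensed into a simple activity summary. The sketch below assumes a hypothetical detection format, a list of dictionaries with a "label" and a "confidence" key, and a hypothetical confidence threshold of 0.5. Neither is taken from the project's actual software, and the example values are made up for illustration.

```python
from collections import Counter


def summarize_activities(detections, min_confidence=0.5):
    """Count detections per activity label, ignoring low-confidence ones.

    `detections` is a hypothetical format: a list of dicts with the keys
    "label" (e.g. "resting") and "confidence" (a value between 0 and 1).
    """
    counts = Counter()
    for detection in detections:
        if detection["confidence"] >= min_confidence:
            counts[detection["label"]] += 1
    return dict(counts)


# Made-up detections for a single frame, for illustration only.
frame_detections = [
    {"label": "resting", "confidence": 0.91},
    {"label": "feeding", "confidence": 0.84},
    {"label": "standing", "confidence": 0.42},
    {"label": "resting", "confidence": 0.77},
]
print(summarize_activities(frame_detections))
# → {'resting': 2, 'feeding': 1}
```

Collecting such summaries over time would give a rough picture of how long the herd spends on each activity, although the threshold would need tuning for a real barn.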

Results and Discussion

The results of the different image classification and segmentation approaches are shown below. These results help the farmer to identify the position and activity of each cow in a real-time monitoring system. Convolutional neural networks are applied in a wide range of image analysis tasks, and applying one to this setting was one of the challenging parts of the work. A classification model takes an image as input and makes a single prediction for the whole image. Image segmentation with a convolutional neural network instead classifies every pixel into a one-hot vector that indicates the class of that pixel, resulting in a segmentation mask for the entire image (Klaas Dijkstra 2021).
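The per-pixel classification described above can be sketched as follows. The example assumes that a network has already produced a list of class scores for every pixel. The two class names and the score values are hypothetical and serve only to show how scores become a mask.

```python
CLASS_NAMES = ["background", "cow"]  # hypothetical classes for this sketch


def scores_to_mask(scores):
    """Turn per-pixel class scores into a mask of class indices.

    `scores[row][col]` is a list with one score per class; the class with
    the highest score wins for that pixel.
    """
    mask = []
    for row in scores:
        mask.append([max(range(len(pixel)), key=pixel.__getitem__) for pixel in row])
    return mask


def one_hot(class_index, num_classes):
    """Encode a class index as a vector with a single 1."""
    return [1 if i == class_index else 0 for i in range(num_classes)]


# Made-up scores for a 2x2 image, for illustration only.
scores = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.6, 0.4], [0.3, 0.7]],
]
mask = scores_to_mask(scores)
print(mask)
# → [[0, 1], [0, 1]]
print([[one_hot(index, len(CLASS_NAMES)) for index in row] for row in mask])
```

In this toy case the right-hand column of pixels is assigned to the "cow" class, which is the kind of mask a segmentation network returns for each frame.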

The first methods applied to the cattle field images were YOLOv1 to YOLOv5. The versions show slight variations in their results; version 5 performed best, and its results are shown in the figure below. YOLO architectures directly estimate the bounding box and the class of individual object instances in images of everyday activities. YOLOv5 is aimed especially at small object detection. Instance segmentation is treated as a sequence of object detection and segmentation (Thuan 2021).

Faster R-CNN is one of the advancements of the CNN, developed by increasing the speed of R-CNN. The RPN building block helps to select different regions during training. After training through the convolutional network, the identified locations are used for feature mapping. This process gives high performance in feature identification, reduces the computation cost, and supports real-time assessment. In addition, Faster R-CNN has another module, called adaptive scale, which adapts to changes in the image. The adaptive scale module also tunes the network to improve the accuracy of classification from the image. Faster R-CNN therefore ensures a good recognition rate and can be used for predicting the activity of the cattle (Sonal Deshmukh 2022).

The image classification results from the cattle field are shown for YOLOv5 in figure 3a, Faster R-CNN in figure 3b, and Fast R-CNN in figure 3c.

Conclusion

The study of the YOLO versions and Faster R-CNN shows high performance in feature identification, a reduced computation cost, and suitability for real-time assessment. This study helps farmers and entrepreneurs to understand the new digitalization tools available on the market and to use them to improve agricultural profitability and livestock production. The study also helps customers to refine their own observations and to choose the right digitalization tool for their business.

References

Ge, Z. L. 2021. YOLOX: Exceeding YOLO Series in 2021.

Inbaraj, X. A.-H.-G. 2021. Object Identification and Localization Using Grad-Cam++ With Mask Regional Convolution Neural Network. Electronics, 10, 2079-9292. Available at: https://doi.org/10.3390/electronics10131541.

Kim, K. 2021. Multiple Object Detection Algorithms. Available at: https://www.iaacblog.com/programs/object-detection-algorithm/.

Klaas Dijkstra, J. V. 2021. CentroidNetV2: A hybrid deep neural network for small-object segmentation and counting. Neurocomputing, 423, 490-505. Available at: https://doi.org/10.1016/j.neucom.2020.10.075.

Sonal Deshmukh, A. K. 2022. Faster Region-Convolutional Neural network-oriented feature learning with optimal trained Recurrent Neural Network for bone age assessment for pediatrics. Biomedical Signal Processing and Control, 71, Part A,1. Available at: https://doi.org/10.1016/j.bspc.2021.103016.

Thuan, D. 2021. Evolution of YOLO Algorithm and YOLOv5: The State-of-the-art Object Detection Algorithm. Oulu University of Applied Sciences, Thesis.

Uijlings, J. V. 2013. Selective Search for Object Recognition. International Journal of Computer Vision, 104, 154–171. Available at: https://doi.org/10.1007/s11263-013-0620-5.

Mitha Rachel Jose

RDI developer

Centria University of Applied Sciences

Tel. 040 181 1683

Heikki Kaakinen

RDI specialist

Centria University of Applied Sciences

Tel. 040 575 4786